WHAT YOU THINK ABOUT SECURITY, ENCRYPTION, AND SECRECY!

April 12, 2016

Thanks to those of you who took part in our little amateur survey. We got almost 500 responses, which is more than some political surveys! But we can’t claim that these results are in any way representative or scientific. To do that, we would have had to ensure that the participants were a random sample across income, location, gender, age, etc. That said, it was still a fun exercise and yielded somewhat informative results.

We’ve made pie charts out of the results, which you can review below along with some thoughts about the outcomes.

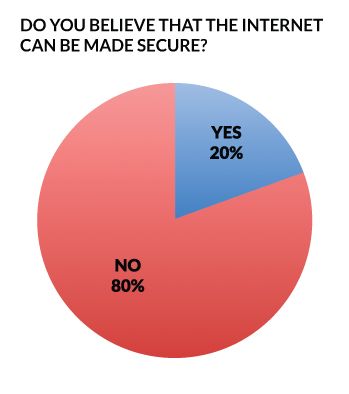

As the chart above shows, folks don’t have much faith or hope that the internet can be made secure. I expressed my doubts, too. The very structure of the thing gives it a bias toward sharing and transparency. This is, we generally agree, a good thing if one is talking about heinous governments hiding their activities, malicious agencies hiding their activities, and bankers or others hiding their wealth and investments in order to avoid paying taxes, to list a few examples. The rise of leaks has had huge, and I would say mostly positive, effects; see this New York Times article. Some of the leaks happen because it’s hard to really, truly secure information, but many of them are due to the actions of brave individuals and whistleblowers.

This lack of faith in the ability to make our information secure doesn’t bode well for the continuation of business, trade and commerce on the web. As mentioned in my original post, trust is something the tech companies are keen to re-establish (while they continue to hoover up our data!).

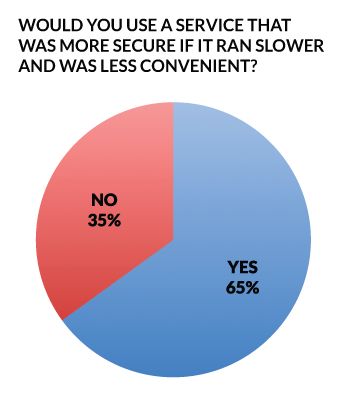

Despite the lack of faith, we dream on: folks would, by about 2 to 1, sacrifice speed for security, even though most answered the previous question by saying they don’t think security is even possible. Hopes and dreams don’t always mix with reason. So, there seems to be a market for security, even at a price. If we mostly want more security and privacy, as it seems we do, then will we want to stop having our data sucked up and our movements tracked by big companies? Hmmm.

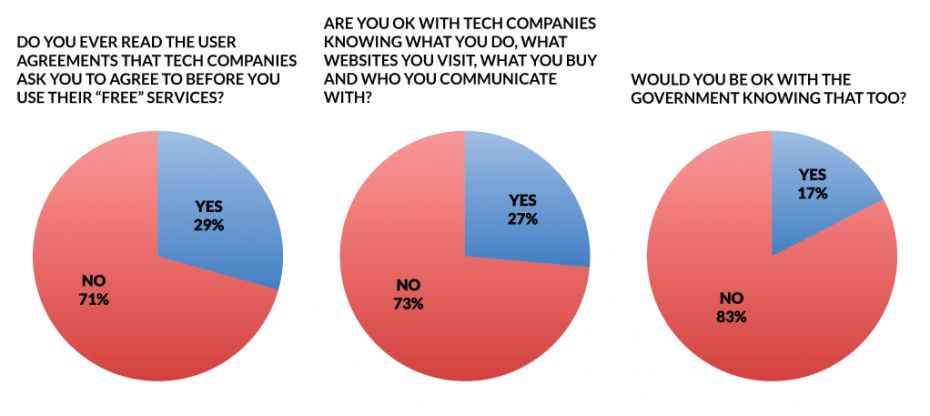

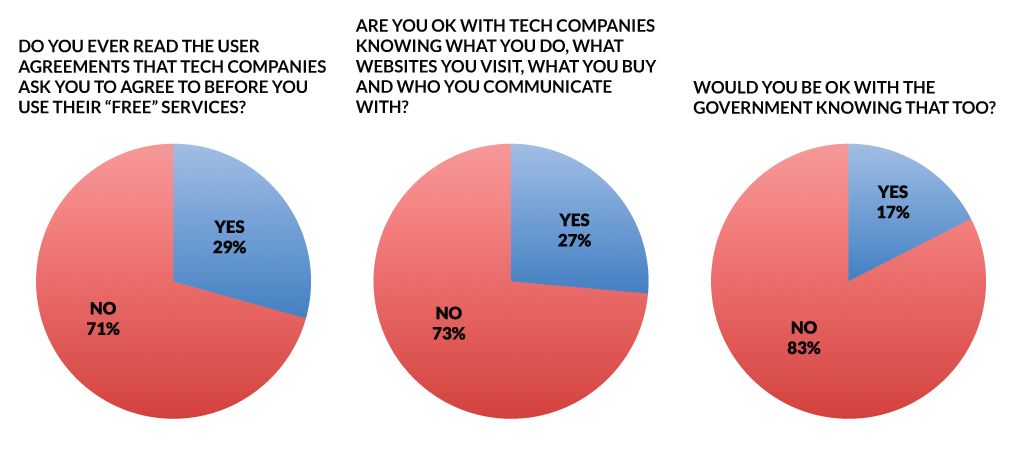

I’m surprised that almost 30% of respondents say they have read the insane and long user agreements that we generally click “agree” on. These are intentionally lengthy and dense in order to discourage clarity and understanding. Generally, giving consent allows tech companies to track us and gather our data. Some of that data allows them to provide us with recommendations or add convenience in other ways, and it is often sold to advertisers so that they can better target us. Overwhelmingly, respondents aren’t cool with tech companies tracking our movements online. Respondents aren’t cool with the government doing it either, which is not a surprise, although almost 20% are fine with the government knowing what we do. So, there’s general dissatisfaction here: distrust of the government AND the tech companies.

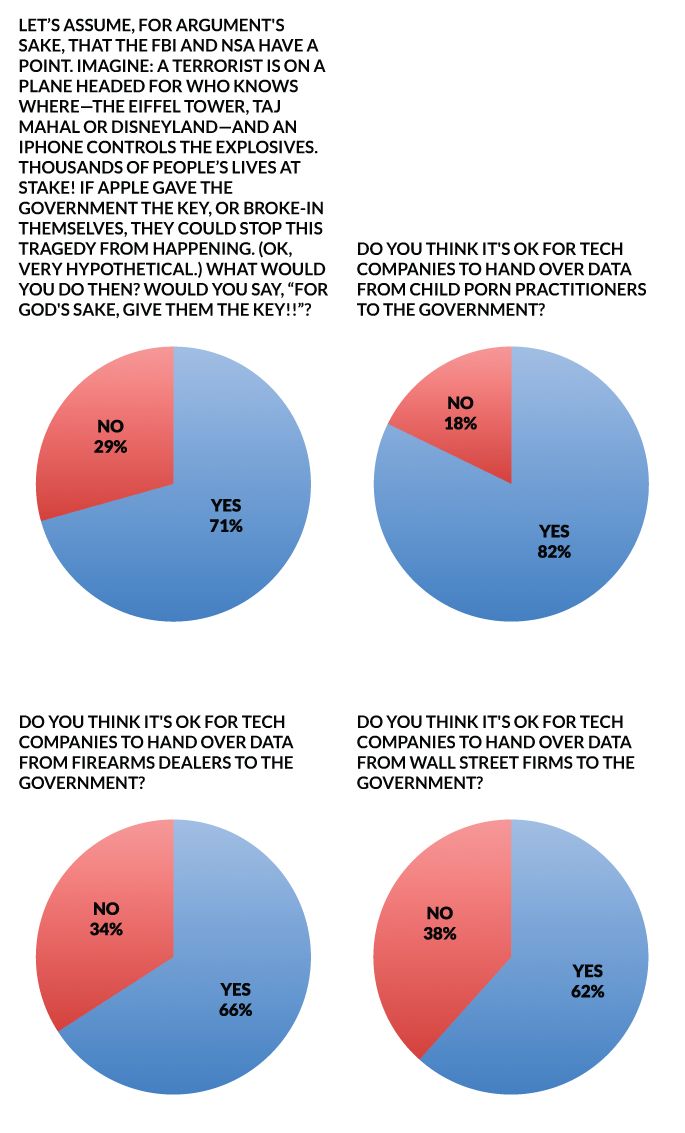

This was a trick question, a moral dilemma. As with lots of moral dilemmas, there often comes a point where we reverse our stance, when we might sacrifice someone so that many more might live, for example. Though most of us would prefer security and privacy, there are specific cases where we might make exceptions. A threat that involves many lives, for sure. Child porn, Wall Street firms: both easy yeses. Firearms dealers, hmmm, not quite as easy, but about twice as many said yes as said no, which I’m guessing is based on an assumption that much of that business is not adequately monitored and some is just plain illegal.

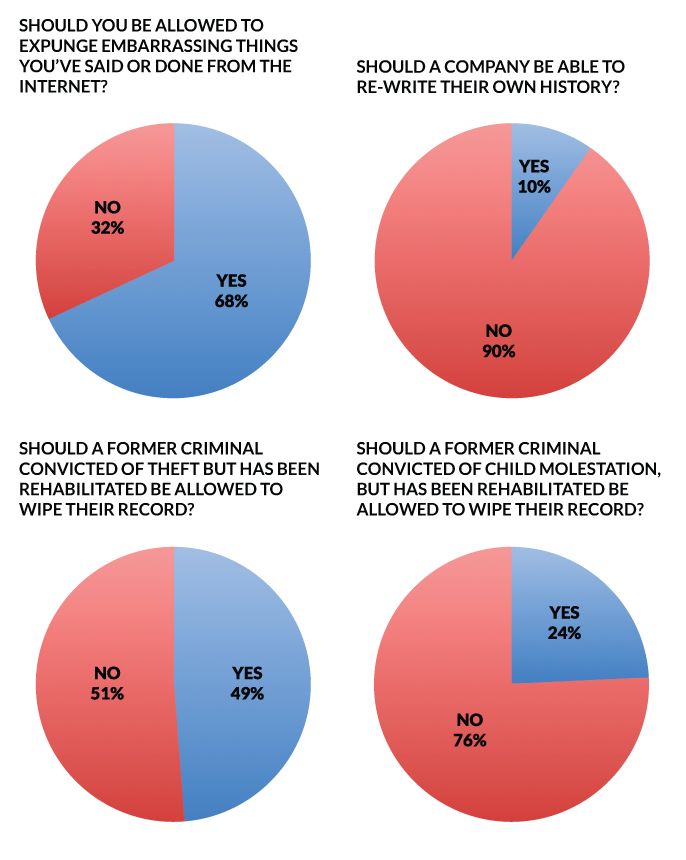

Here’s an interesting set. Folks voted for being able to expunge old information and data about people but would NOT apply the same rule to corporations. This could be the way we framed the questions—“rewrite their history” sounds a bit more dubious than “delete embarrassing things”. But it’s interesting that we DON'T want corporations treated as if they are human beings, something that has legally been in effect for the past six years. As with the moral dilemma above, there are exceptions. Wiping criminal records online was split, and when the intentionally hot topic of child molestation was used, folks overwhelmingly said NO!

So there you have it—some very strong general feelings about not wanting data gathering or access to it on everyone, but quite a few exceptions, too. How to reconcile this? How to legally and morally determine where that line is?

Since we sent out the survey, the F.B.I. found a third party to crack the security code and canceled its hearing against Apple, and, of course, there has been a lot written about it. Here’s a link to The New York Times opinion piece by Jamil N. Jaffer and Daniel J. Rosenthal that explains why they think that Apple’s decision not to help the F.B.I. was in fact bad for its customers: now the government and the company that assisted the F.B.I. in cracking the key (and potentially other hackers) know of a security vulnerability that Apple itself is not even aware of.

Many of you had ideas about how this complicated issue might be dealt with, which you posted in comments on our Facebook page and website, and we’ve included a selection below. Some very thoughtful suggestions and opinions, some standard lines, but lots of very original thinking too—very inspiring!

DB

New York City

Here's a selection of comments made in response to the original article

Security, Encryption and Secrecy: Take a Survey and Find Out Where You Stand

[bold emphasis our own]

Cile Stanbrough: Personally I think we need laws in place where both tech AND the government need to be warranted to access information from the private sector and that any breach of security needs to be accounted for just like any access to personal space under law. If the public wants to pursue it they then have recourse. That said, I don't think it prudent for anyone to share anything on the internet that they don't expect may one day be exploited. I think our understanding of transparency is the real issue here that we are all grappling with along with defining what is the cost of safety.

John Irvine: The key for me is a warrant. If there is probable cause that is signed off on by a court, then I am more inclined to allow information to be shared for criminal investigations. What Snowden showed is that there was a hell of a lot going on without specific warrants, or under supposed blanket warrant authority (which is a bit of an oxymoron.) And re the Apple case: if they have a way to access the info currently, without developing new technology, then they should give it, assuming there's a warrant. If not, then the government asking them to develop new technology to gain access is not necessarily even possible. What would then be out of bounds? Could they require a company to create a time machine to allow the FBI to go back and solve murders? It's like in Chitty Chitty Bang Bang where the King of Vulgaria locks his wise men up until they invent a flying car.

Ambrin Decay: This was incredibly well written. I found the survey somewhat problematic. I find that accumulating a lifetime's worth of data and algorithms to project the future outcomes of individuals, or create institutional, and automatic bias to be a systematic caste system. I do believe in rehabilitation, and that your record should follow you for a period of time, but not a lifetime. I also find the projection of recidivism incredibly problematic, especially if it's becoming table discussion on the topic of incarceration and rehabilitation. While these algorithms might be correct, it also stagnates upward movement. Again, creating a systemic caste system, fully integrated in our lives based on personal preference, associates, academia, personal wealth (more likely, lack thereof), and various other demographics. Allowing this data to be collected directly contributes to the very creation of a caste system. And of course, Big Brother is always watching, along with every site you use, purchase you make, companies you subscribe to, phone numbers, emails, addresses, affiliates, and so forth. All being collected under the guise of security and convenience, and handed over willingly by ourselves or whatever service we have chosen to use. I find data collection a very complex issue. While I believe in exceptions to privacy, I believe there is a correct way to achieve that: warrants and proper legal channels. But ultimate transparency and surveillance is a slippery slope into a multitude of invasive policies, rights violations, and marginalization. And if the government believed in transparency, Snowden, well... There is no two way street here, now is there?

Julie Scelfo: Mr. Byrne, you raise a lot of important points. My vote would be a return to the FISA court system. It wasn't perfect, but it provided a much-needed safeguard in instances when the U.S. government wishes to spy on its own citizens. And as you may be aware, European courts are several steps ahead of the U.S. in confronting "Right to be Forgotten" laws. Here's something I wrote about balancing personal privacy not with data but with the First Amendment right to free press (as it pertains to celebrity).

IT Lab Consulting: Some information as a former Apple Employee/Computer Technician. The FBI hasn't requested data. They requested Apple provide them with an entry point into the software. Something Apple has refused to do historically. Apple hasn't engineered a crack for their own encryption and don't have access to any of that data. If Apple provided the FBI with a vulnerability in the OS, that vulnerability can be reverse engineered and spread via coding some form of malware. We've seen US governmental agencies use malware before in dealing with perceived threats. Particularly recently well covered, in regards to certain foreign powers developing nuclear reactors and a viral software designed to brick the fragile computers that control them. That one spread and caused some unforeseen issues. To sum, the FBI isn't going to release the device to Apple to crack because it's evidence in an investigation. They want a back door. This is a rational recuse to try to force Apple to provide one. Back doors are insecure. It's creating a software with vulnerabilities there that are meant to be exploited. This wouldn't just make it easier for the FBI to get the data they want. It makes it easy for any hacker who wants the data as well. This isn't even factoring in the fact that the current director of the FBI wants to ban encryption in the private sector altogether. Effectively making everyone able to see everyone else's data. Hackers don't encrypt, use digital sleight of hand to make themselves appear to be a trusted member of a network, they decrypt and exploit data, to gain access to your personal information. And they're good at it. While the FBI has good intentions behind this specific terrorism case, they really don't want any privacy screens between the populace and the executive branch of the government.
That could potentially lead to some form of statist Minority Report sort of spying and behavior predicting and profiling prior to actual criminal activity, undermining our system of due process. It is complex. And I don't have a good answer. But potentially giving a government the proverbial keys to the kingdom of personal privacy isn't the answer.

Todd Crawshaw: I wonder how many people who upload personal data to "the Cloud" would do so if it was called by its correct name - "someone else's computer".

Anthony Biggs: DB, just adding fuel to a smokescreen with this survey. The need for "security" is a consequence of fear, of distrust and from there, there is no place for openness. Is that really the question, DB?

Denis Mailloux: Most of the questions I could answer yes and no. How can I stop going on the internet in 2016 when there are so many things going on there? But as time passes, there is less and less trust online. When I take the bus I have to trust the driver. When I eat I must trust the grocery store, haircut: the barber, learning: the teacher. With the internet, large companies and most governments, we kind of lose humanity and, in the same way, our own desire or responsibility to be trustworthy ourselves.

Dillon Fries: If it's a matter of Nat'l Security (and not farce driven by fear) can't Apple (for example) just hand over the data for the one account? Maybe I'm missing something, but it sounds like the debate is access for all or none. There has to be a conviction or cause to invade someone's personal property. I don't see the issue. As for civilian internet privacy/rights... I agree with what someone else already said: don't want it online, don't post it online. That said, the exploitation of mugshots should not exist. Qualified people can't get a job b/c of that. Doesn't matter if they were convicted or not, a false arrest, etc. It undermines the entire democratic justice system that is in place. The bottom line is people see that and now you are on the wrong side of history even in a completely corrupt gov't. Allowing people to exploit public records has so many harmful domino effects on society. And we also need trained "cyber police" to hold people responsible with real consequences for their behavior on the internet (i.e., harassment, slander, bullying).

Gordon Baxter: Back in 2004 Mick Farren wrote that the people we should really worry about are Google, more than the NSA... turns out he was right…

Stephen Willcock: Really good read. Next chapters might explore the same issue outside the USA's territorial borders. We know of some restrictive controls used abroad but are there others who provide freedom from surveillance?